The New York Times is suing Microsoft and OpenAI for billions of dollars over copyright infringement, alleging that the powerful technology companies used its information to train their artificial intelligence models and to “free-ride” on its work.

The use of its data without permission or compensation undermined its business model and threatened independent journalism “vital to our democracy”, the media organisation said in documents filed with a federal court in Manhattan.

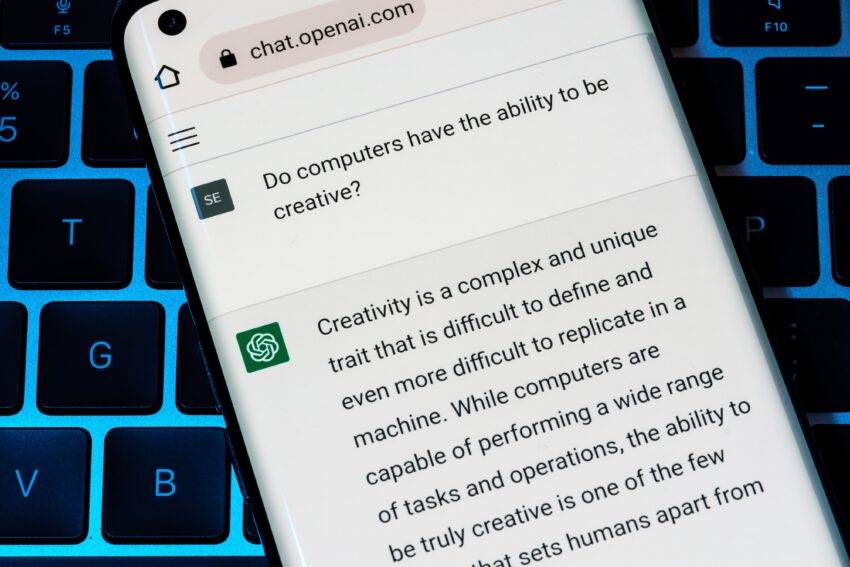

The lawsuit has laid bare the controversial use by technology companies of the accurate, high-quality data produced by content creators such as journalists, which is needed to power the “large-language models” that form the backbone of generative artificial intelligence.

This case will be watched closely by others in the creative industries who are concerned that their intellectual property is being infringed. Some critics claim that the word “train”, used to describe how collected data is fed into AI models, is Silicon Valley spin and that “scrape” would be more accurate.

A series of copyright lawsuits has been filed by authors and artists against OpenAI and other tech companies in the US. The plaintiffs include the comedian Sarah Silverman and the Pulitzer prize-winning novelist Michael Chabon. However, judges have rejected elements of those claims because the plaintiffs failed to show that the AI had reproduced identical material, whereas the NYT appears to have evidence of facsimile use.

Dr Andres Guadamuz, a reader in intellectual property law at the University of Sussex, who has been following the cases, said the newspaper’s filing appeared more solid than earlier claims and was a “negotiating tactic” after Axel Springer’s deal with OpenAI had established a value for news content.

“It is probably one of the strongest cases so far. They have managed to get some outputs that appear to be an entire replication of the source material of the inputs. And that is a big deal. A lot of cases have been dismissed due to the fact that a lot of the lawsuits have not been able to show infringing outputs,” he said.

The New York Times claims it can demonstrate facsimile use of its content. In a list of examples, it set out how the chatbot could recite significant portions of the publisher’s work verbatim, including the text of in-depth investigations that ChatGPT could not have found elsewhere, and could accurately mimic its style.

For example, the chatbot could allegedly quote passages from reviews written by the paper’s restaurant critics. A request to the chatbot to type out the start of a New York Times piece “because I’m being paywalled out of reading the New York Times’s article” prompted the response “Certainly! Here’s the first paragraph”, demonstrating how the chatbot could be used to avoid paying for the content, the company claimed.

The New York Times also found that the chatbots would invent copy in its style, purporting to be by one of its journalists. In one case, when asked for a portion of a New York Times article, “Bing Chat completely fabricated a paragraph, including specific quotes … that appear nowhere in The Times article in question or anywhere else on the internet”, the publisher said.

The use of its data to refine the AI models was financially motivated, the publisher claimed. “Microsoft’s deployment of Times-trained AI throughout its product line helped to boost its market capitalisation by a trillion dollars in the past year alone. And OpenAI’s release of ChatGPT has driven its valuation to as high as $90 billion,” it said.

Some media organisations, including Axel Springer, the German multinational media group that publishes Politico, Bild and Insider, and the Associated Press, the news agency, have sought commercial deals with OpenAI to license their content. Others, such as the BBC, The Guardian and Lonely Planet, have blocked OpenAI from scraping the content on their websites.

The New York Times said it had tried and failed to negotiate with the technology companies, and disputed their argument that using its content was covered by “fair use”.

The row also demonstrates how the traditional internet search model has been upended. Whereas search engines directed users to company websites, so that businesses did not miss out on revenue from visitors, chatbot responses can be instantaneous. They can also be unsourced and inaccurate.